Russian Scientists Achieve Breakthrough in Eye-Tracking Neural Interfaces and Gaze-Based Computer Control

Researchers at the Moscow State University of Psychology and Education have unveiled a machine-learning algorithm that distinguishes intentional gaze commands from spontaneous eye movements, promising greater accuracy for users with disabilities and new opportunities for assistive technology markets.

Innovating Eye-Control: The Technical Leap

At the heart of this development lies a hybrid analysis of microsaccades (tiny, involuntary eye movements that occur during fixation) and real-time contextual data drawn from the application in use. Traditional gaze-tracking systems often misinterpret random fixations or brief hesitations as input, leading to erroneous commands. By contrast, the new algorithm identifies statistical patterns of user behavior and underlying neurophysiological signals to filter out unintentional gaze events. This approach directly addresses the so-called “Midas effect,” more commonly known as the Midas touch problem, in which every glance inadvertently activates the interface. It does so by differentiating between sustained, deliberate looks and spontaneous eye motions.
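To make the Midas touch problem concrete, here is a minimal Python sketch of the kind of dwell-only trigger that traditional systems rely on. It is not the researchers' code: the `Fixation` record, the `dwell_commands` helper, the element names, and the 300 ms threshold are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Fixation:
    """One fixation as a hypothetical eye tracker might report it."""
    target: Optional[str]  # UI element under the gaze, None for empty space
    duration_ms: float


def dwell_commands(fixations: List[Fixation], threshold_ms: float = 300.0) -> List[str]:
    """Return every target a dwell-only interface would activate.

    Any fixation on a UI element that lasts at least threshold_ms fires a
    command, whether or not the user meant it (illustrative baseline only).
    """
    return [
        f.target
        for f in fixations
        if f.target is not None and f.duration_ms >= threshold_ms
    ]


fixations = [
    Fixation("menu", 350.0),       # user is only reading the label
    Fixation(None, 500.0),         # resting gaze on empty space
    Fixation("ok_button", 900.0),  # deliberate selection
    Fixation("close", 120.0),      # passing glance
]

print(dwell_commands(fixations))  # ['menu', 'ok_button']
```

The unwanted `menu` activation is the false positive: the user paused to read, and a pure dwell timer cannot tell that apart from a command. Raising the threshold removes it only at the cost of slower deliberate selections.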

Key technical details of the research include:

Microsaccade Analysis: Continuous monitoring of fixation stability, amplitude, and frequency of microsaccades to detect intentional pauses.

Contextual Integration: Use of application-specific metadata (such as UI element positions and user intent models) to reinforce command classification.

Behavioral and Neurophysiological Modeling: Algorithms trained on datasets combining eye-tracking logs with EEG patterns, enabling recognition of neural signatures associated with voluntary gaze control.

These combined models yield a significant reduction in false-positive activations while maintaining responsiveness, setting a new benchmark in gaze-based human–computer interaction.
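As a rough illustration of how fixation-stability features and application context could be combined, the following Python sketch replaces the trained model with a hand-written rule. Everything in it is an assumption rather than the published method: the feature definitions, the thresholds, the `Rect` type, and the premise that a deliberate look is steadier than a spontaneous one. The EEG component is left out entirely.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Sample = Tuple[float, float]  # gaze position (x, y) in degrees of visual angle


@dataclass
class Rect:
    """A UI element's bounds, expressed in the same gaze coordinates."""
    x: float
    y: float
    w: float
    h: float

    def contains(self, px: float, py: float) -> bool:
        return self.x <= px <= self.x + self.w and self.y <= py <= self.y + self.h


def dispersion(samples: List[Sample]) -> float:
    """Fixation stability: horizontal plus vertical extent of the gaze cloud."""
    xs = [s[0] for s in samples]
    ys = [s[1] for s in samples]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def microsaccade_count(samples: List[Sample], dt_s: float,
                       velocity_threshold: float = 10.0) -> int:
    """Count onsets where sample-to-sample speed (deg/s) crosses the threshold."""
    count, above = 0, False
    for (x0, y0), (x1, y1) in zip(samples, samples[1:]):
        fast = math.hypot(x1 - x0, y1 - y0) / dt_s > velocity_threshold
        if fast and not above:
            count += 1
        above = fast
    return count


def is_intentional(samples: List[Sample], dt_s: float, targets: List[Rect],
                   min_duration_s: float = 0.4, max_dispersion: float = 0.3,
                   max_rate_hz: float = 3.0) -> bool:
    """Hypothetical rule: long, steady gaze centred on a known UI element."""
    duration = len(samples) * dt_s
    if duration < min_duration_s or dispersion(samples) > max_dispersion:
        return False
    if microsaccade_count(samples, dt_s) / duration > max_rate_hz:
        return False
    cx = sum(s[0] for s in samples) / len(samples)
    cy = sum(s[1] for s in samples) / len(samples)
    return any(t.contains(cx, cy) for t in targets)  # contextual check


button = Rect(4.0, 4.0, 2.0, 2.0)
steady = [(5.0 + 0.01 * (i % 2), 5.0) for i in range(60)]  # 0.6 s at 100 Hz
drifting = [(5.0 + 0.02 * i, 5.0) for i in range(60)]      # gaze sliding past

print(is_intentional(steady, 0.01, [button]))    # True
print(is_intentional(drifting, 0.01, [button]))  # False
```

In a learned system these same quantities would more plausibly serve as input features to a classifier than as fixed cut-offs. The rule form is used here only to keep the example short and inspectable.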

Transformative Impact for Users and Markets

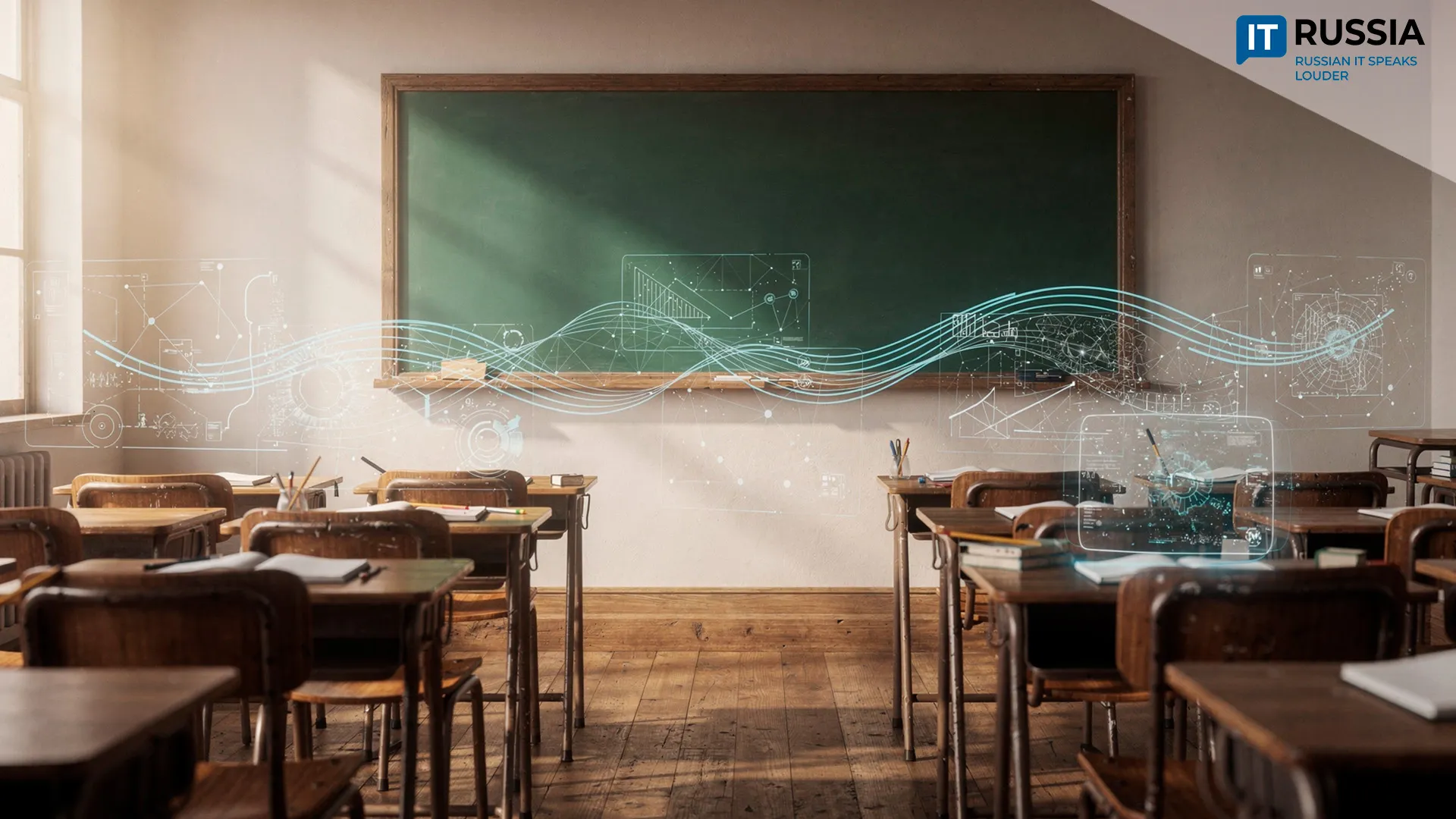

This breakthrough holds profound implications for individuals with severe motor impairments, including children with cerebral palsy and adults with total paralysis. Parents and caregivers report that existing eye-tracking solutions already enhance autonomy in communication, education, and daily tasks; improvements in accuracy will further reduce user frustration and unlock more complex applications, such as hands-free typing, gaming, and environmental control.

Moreover, the innovation fosters import substitution and positions Russia as a competitive player in the global assistive technology sector. An early software development kit (SDK) or prototype is slated for release by late 2025, targeting integration with educational platforms, medical devices, and virtual reality (VR) environments. Pilot projects in 2026–2027 will validate applications in VR/AR rehabilitation, while full commercial roll-out is projected by 2028, supported by partnerships with industry leaders such as Tobii and Varjo.

Looking Ahead: Scaling and Global Partnerships

Beyond Russia’s borders, the algorithm can be embedded in brain–computer interface (BCI) systems and augmented/virtual reality solutions to create adaptive learning, therapeutic, and rehabilitation products. Customizable models account for age-related differences and specific neurological conditions, ensuring accessibility for diverse user groups. Potential collaborations with international firms like Scope AR could accelerate export of domestically developed IT, tapping markets in Europe, the Middle East, and Southeast Asia where demand for affordable assistive technologies is rising.

Historical Progression:

2019–2020: Initial ML experiments to correct spurious gaze inputs under controlled conditions.

2021: Early VR-based trials highlighting limitations outside pre-defined templates.

2022–2023: Industry adoption of ML in eye-tracking hardware, with software capabilities lagging.

2024: Integration of advanced gaze systems in rehabilitation complexes.

2025: Launch of the combined microsaccade–contextual algorithm, marking a paradigm shift in precision.