Russia Moves to Establish AI Legislation

Russia is preparing a legal framework for the use of artificial intelligence, aiming to balance innovation with safeguards for authors, developers, and society at large.

New Legislation

The state development corporation VEB.RF has announced plans to work with leading universities—including Skoltech, the Moscow School of Management Skolkovo, and Moscow State University—on shaping AI regulation. Officials say current laws fail to meet the growing needs of society and business.

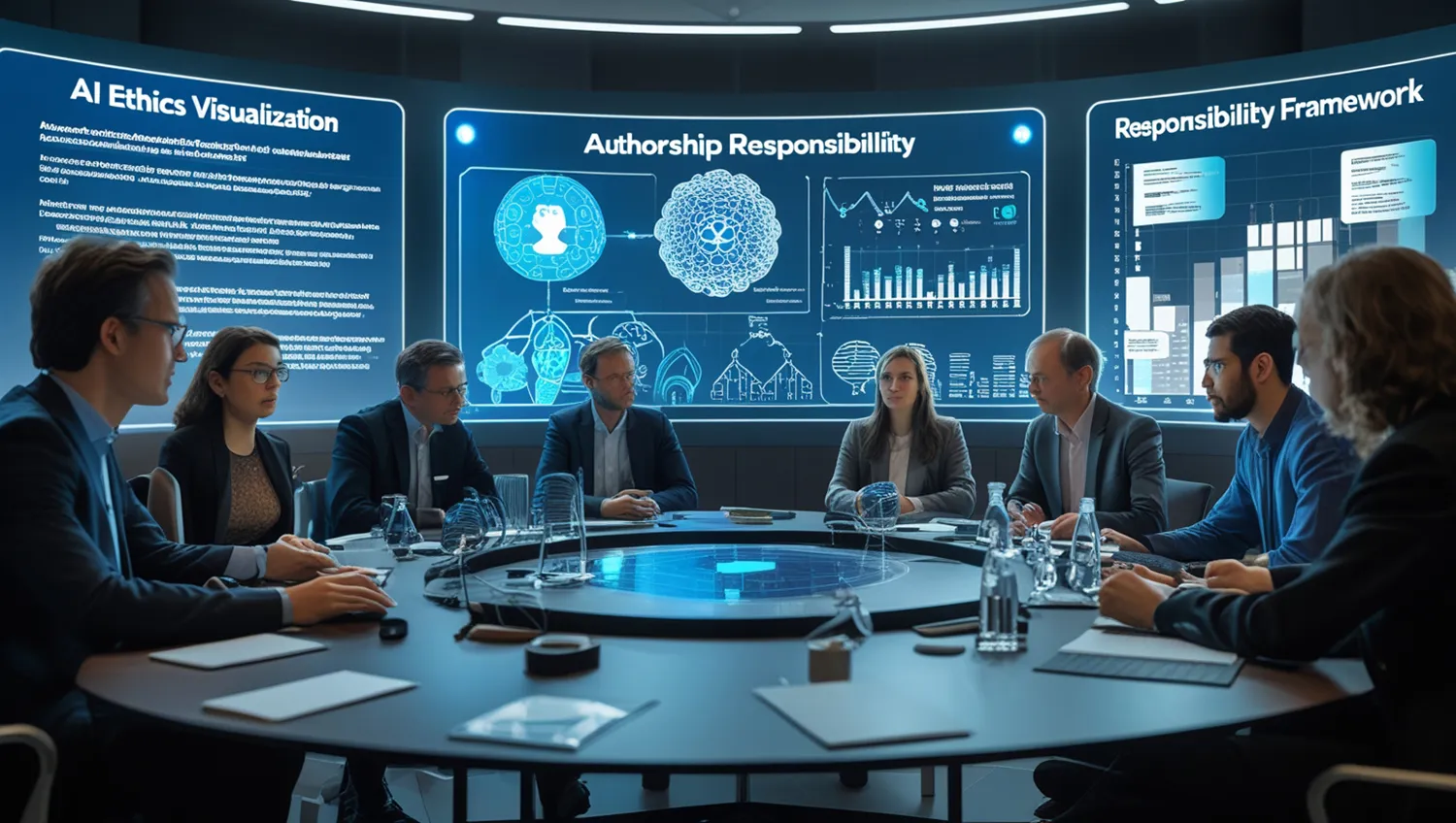

Key issues under discussion include copyright protection for cultural products created with AI, the liability of AI developers and content creators to the public, the preservation of individual creativity, and the risks of automating artistic processes. The initiative could lead not only to new laws but also to changes in Russian legal practice concerning AI, ethics, authorship, and accountability.

Public Demand

By creating a regulatory foundation for the IT sector, lawmakers hope to give developers, companies, universities, and cultural leaders clear and transparent rules for future work. Such rules are also expected to strengthen public trust in AI and reduce the risk of misuse. The move responds directly to society’s growing demand for fairness, transparency, and protection of content authors.

Universities and the wider academic community could become centers for drafting new norms, expert opinions, and methodologies for evaluating AI’s influence on culture and education. Russia may also introduce certification systems for AI, including checks of algorithms for cultural appropriateness. Such an approach could boost international confidence in Russian AI solutions: if the new rules align with global standards, integration and cooperation with foreign partners would be easier.

At the same time, experts caution that the risks must be weighed carefully: regulation has to balance stimulating innovation against preventing abuse while accounting for the interests of all stakeholders. That balance is especially hard to strike because key concepts such as authorship, individuality, and liability still lack clear definitions.

Regulation Around the World

Around the world, governments are moving in the same direction. The European Union is working on its AI Act. In the United States, debates over copyright for AI-generated content continue, and patent law is being updated. China has already introduced regulations on generative AI, including rules on data security and content moderation.

In Russia, the proposals under discussion include draft laws on personal data and on the legal liability of AI systems. Universities and technology hubs are also developing their own ethical guidelines and internal frameworks. Together with the legislative work, these domestic initiatives point to a multi-layered approach to managing AI’s societal impact.

Resolving Conflicts in AI Governance

The VEB.RF initiative signals that the state now recognizes the urgency of regulating AI as its influence grows. Ethical, cultural, and legal questions are beginning to move from abstract debate into codified norms and enforceable rules.

Within the next one to two years, Russia may produce policy frameworks, guidelines, and standards—or even concrete laws—governing authorship, liability, and the rights of both content creators and AI developers. Universities are expected to host interdisciplinary labs and research groups, while lawmakers may explore harmonization with international standards.

Key challenges remain: drawing the line between human creativity and automated generation, securing copyright protection, and establishing liability mechanisms for cases in which AI is misused. These are the conflicts Russia aims to resolve through its new legal architecture.