Russian Video Conferencing Platforms to Get Deepfake Detection Upgrade

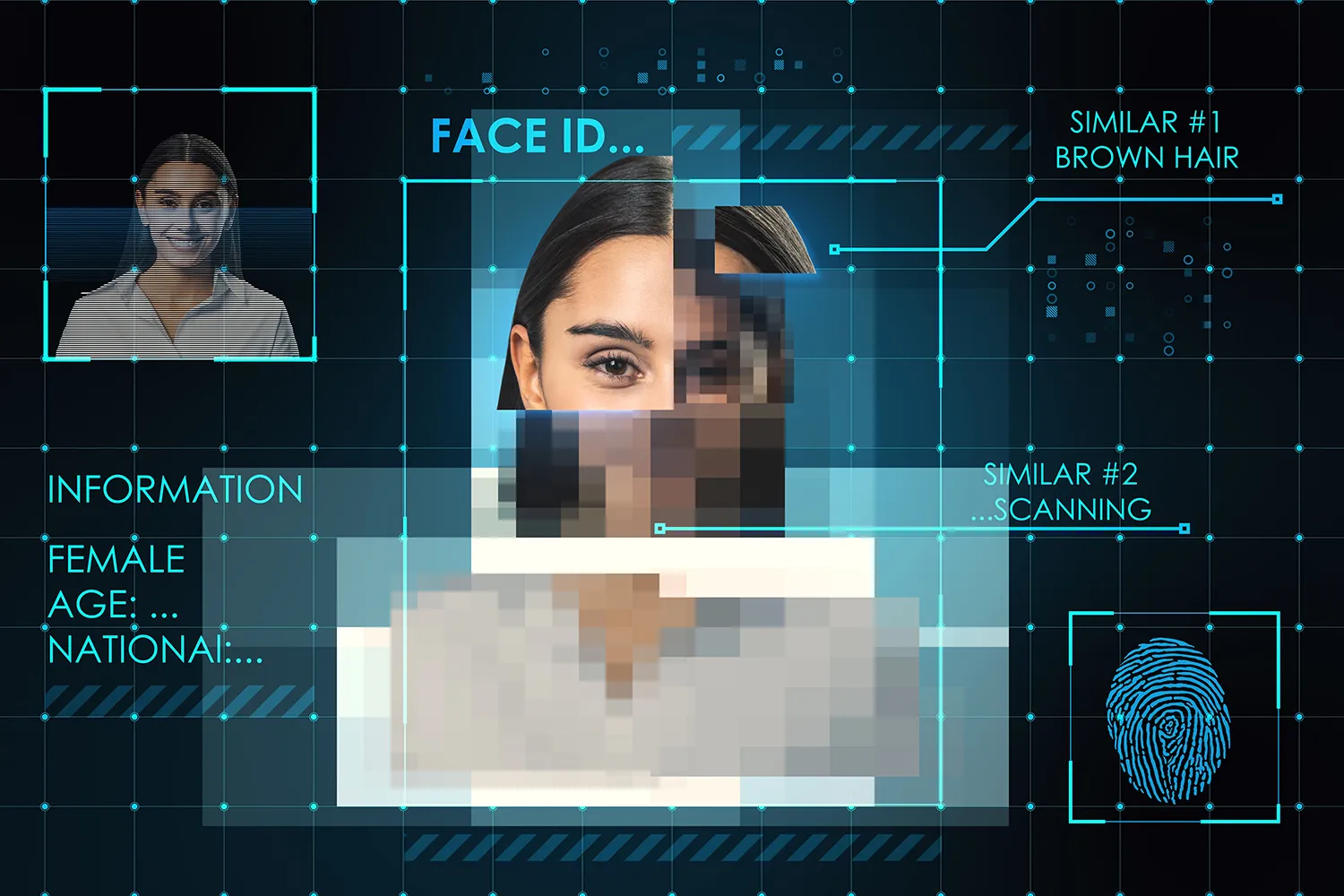

Russian tech firms are preparing to roll out real-time, AI-powered tools that protect video conferencing platforms from synthetic identity fraud and deepfake threats.

AI in Action Across Platforms

Russian developers are close to completing software detectors that can identify video streams generated by neural networks. The tools rely on machine learning models trained specifically to detect anomalies in live video communications.

The algorithms look for lip-sync mismatches, unnatural voice patterns, and other indicators of synthetic content, allowing the systems to quickly determine whether a live video feed shows a real person or a digitally created likeness. Reported accuracy currently stands at 98%.
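The vendors have not published how their detectors work. As a purely illustrative sketch, assume that upstream models already produce a per-frame anomaly score between 0 and 1 for lip-sync mismatch, voice anomalies, and visual artifacts. A background monitor could then combine these scores with a weighted sum, average them over a sliding window of recent frames, and raise an alert only when the average crosses a threshold. The class names, weights, window size, and threshold used here are all hypothetical.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class FrameSignals:
    # Hypothetical per-frame anomaly scores in [0, 1], assumed to come
    # from upstream models (not shown); higher means more suspicious.
    lip_sync_mismatch: float
    voice_anomaly: float
    visual_artifact: float


# Hypothetical weights; a real system would learn or tune these.
WEIGHTS = {"lip_sync_mismatch": 0.4, "voice_anomaly": 0.35, "visual_artifact": 0.25}


class StreamMonitor:
    """Scores frames silently and reports an alert only on sustained anomalies."""

    def __init__(self, window: int = 30, threshold: float = 0.7):
        self.scores = deque(maxlen=window)  # keeps only the most recent `window` scores
        self.threshold = threshold

    def frame_score(self, s: FrameSignals) -> float:
        # Weighted sum of the individual indicators for one frame.
        return (WEIGHTS["lip_sync_mismatch"] * s.lip_sync_mismatch
                + WEIGHTS["voice_anomaly"] * s.voice_anomaly
                + WEIGHTS["visual_artifact"] * s.visual_artifact)

    def update(self, s: FrameSignals) -> bool:
        """Add one frame; return True if the stream should be flagged."""
        self.scores.append(self.frame_score(s))
        # Stay silent until the window is full, so a few noisy frames
        # at the start of a call cannot trigger an alert on their own.
        if len(self.scores) < self.scores.maxlen:
            return False
        return sum(self.scores) / len(self.scores) >= self.threshold


monitor = StreamMonitor(window=3, threshold=0.7)
for frame in [FrameSignals(0.9, 0.8, 0.9)] * 3:
    flagged = monitor.update(frame)
print(flagged)
```

Averaging over a window instead of reacting to single frames is one simple way for such a detector to stay in the background and intervene only when anomalies persist.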

Several companies are developing their own deepfake detection systems. IVA Technologies, for example, is collaborating with VisionLabs, a global leader in facial recognition. "The product is designed for cloud-based video conferencing services and is especially relevant given the rise in fraud based on social engineering and identity spoofing," said IVA’s Deputy CEO Maksim Smirnov. The product is currently being tested and refined.

Meanwhile, MTS Link reports its own technology is fully tested and ready for broad deployment.

On the Brink of Deployment

The first wave of implementation could begin as early as this fall. State enterprises and financial institutions, where anti-fraud measures are critical, are expected to be the first adopters.

Developers emphasize that these tools will not affect user experience or video quality. The detection system operates silently in the background, only intervening when a threat is detected. As Vinteo CEO Roman Samoylov explained, "Initially, this functionality will serve specific users, such as top-level executives and financial sector professionals, but it will gradually expand to broader user segments."

Global Relevance of the Russian Solution

The need for deepfake detection extends far beyond Russia. In one widely reported case from early 2024, scammers used deepfake video to impersonate senior executives of a multinational company during a video call with an employee of its Hong Kong office. Every participant except the target was fake, and the employee was persuaded to transfer about $25 million.

Russia has also faced such attacks. In Rostov-on-Don, fraudsters used a deepfake of the local mayor to invite people to fake meetings. A similar incident involved the mayor of Kazan, with users receiving invitations to video calls via spoofed email addresses.

As the quality of synthetic video continues to improve, visual inspection alone will no longer suffice. Experts say that only dedicated software will be able to reliably detect such forgeries, which makes these detectors essential to digital hygiene and cybersecurity.

A Standard, Not a Feature

In the near future, deepfake detection may become a standard requirement for any video communication service. Russian developers are ahead of the curve in turning detection from an optional feature into a security standard. This could position Russia not only as a technological leader in the field but also as an early exporter of effective anti-fraud tools.

With demand rising worldwide, these tools are expected to gain traction across international markets, where synthetic identity fraud is becoming a major threat.