Russian Students Develop AI Tools to Translate Sign Language

Two student projects from Siberia are tackling accessibility, using computer vision to turn gestures into text and speech for people with hearing impairments.

Two students from Altai State Technical University (AltSTU), Daria Funk and Danil Golotovsky, reached the finals of the “Best Informatization Projects in Altai” competition with systems that automatically translate Russian Sign Language (RSL). Their work aims to improve access to education, employment, and everyday communication for people with hearing impairments.

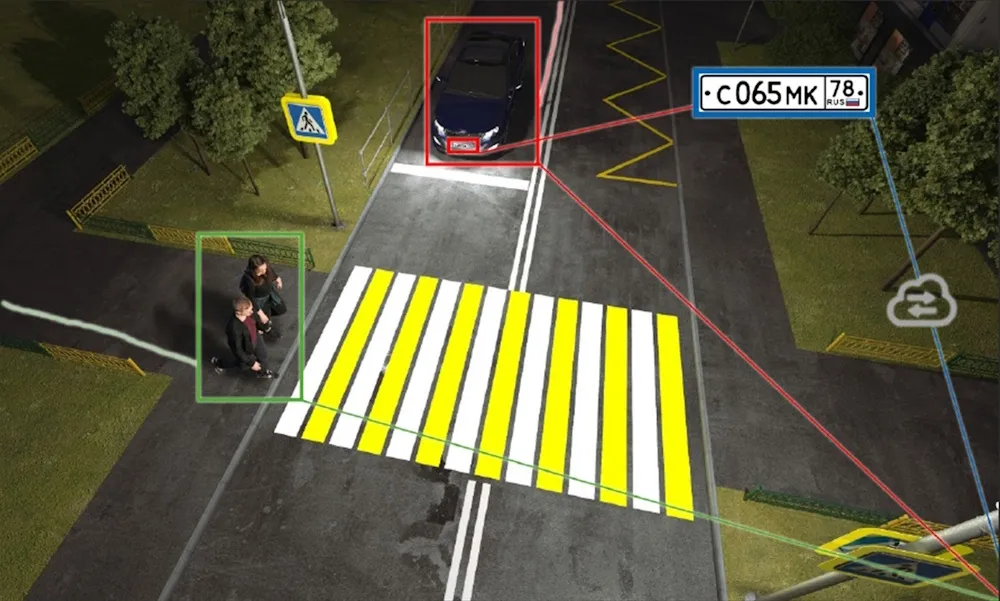

Both projects rely on artificial intelligence and computer vision. Funk’s cross-platform app translates gestures into text or speech in real time: it analyzes the video stream, locates body and facial landmarks, and recognizes signs, reportedly with high accuracy even in noisy conditions.
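
The article does not say how Funk’s app is built. As a rough illustration of the general landmark-based idea, the sketch below assumes a landmark detector has already produced (x, y) points for a frame. It normalizes them so the result does not depend on where the signer stands or how far they are from the camera, then labels the frame with the closest stored template. The function names, the toy templates, and the nearest-template rule are hypothetical stand-ins, not the student’s actual method.

```python
import math

Point = tuple[float, float]


def normalize(landmarks: list[Point]) -> list[Point]:
    """Center landmarks on their mean and scale them to unit size."""
    n = len(landmarks)
    cx = sum(x for x, _ in landmarks) / n
    cy = sum(y for _, y in landmarks) / n
    centered = [(x - cx, y - cy) for x, y in landmarks]
    # Fall back to 1.0 if every point coincides, to avoid dividing by zero.
    scale = max(math.hypot(x, y) for x, y in centered) or 1.0
    return [(x / scale, y / scale) for x, y in centered]


def distance(a: list[Point], b: list[Point]) -> float:
    """Euclidean distance between two equally sized landmark sets."""
    return math.sqrt(
        sum((ax - bx) ** 2 + (ay - by) ** 2 for (ax, ay), (bx, by) in zip(a, b))
    )


def classify(landmarks: list[Point], templates: dict[str, list[Point]]) -> str:
    """Return the label of the template closest to the normalized input."""
    query = normalize(landmarks)
    return min(templates, key=lambda label: distance(query, normalize(templates[label])))


# Toy templates (assumption): real systems would use many landmarks per frame
# and a trained model rather than two hand-written shapes.
TEMPLATES = {
    "sign_a": [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)],  # points on a horizontal line
    "sign_b": [(0.0, 0.0), (0.0, 1.0), (0.0, 2.0)],  # points on a vertical line
}

# The same horizontal shape, shifted and four times larger, still matches.
print(classify([(10.0, 5.0), (14.0, 5.0), (18.0, 5.0)], TEMPLATES))
```

Normalizing before comparison is what lets a single template cover signers at different positions and distances; a production recognizer would also need to handle motion over time, which this single-frame sketch ignores.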

Golotovsky went further, designing an algorithm that converts not just individual signs but entire phrases into grammatically coherent text. His system already includes a database of 1,000 signs, each recorded in 20 variations performed by different people. Looking ahead, he plans to add reverse translation, turning text back into sign language with a 2D avatar.
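
To make the scale of such a database concrete: 1,000 signs with 20 variations each comes to 20,000 recorded samples. The sketch below shows one way a collection of that size might be indexed and split so that a recognizer is checked on performers it has not seen. The article does not describe how the database is actually organized; the assumption that variation k of every sign comes from the same performer k, and the names `Sample`, `build_index`, and `split_by_signer`, are hypothetical.

```python
from dataclasses import dataclass

NUM_SIGNS = 1_000        # figure given in the article
VARIANTS_PER_SIGN = 20   # figure given in the article


@dataclass(frozen=True)
class Sample:
    sign_id: int
    signer_id: int  # assumption: variation k is performed by signer k


def build_index(num_signs: int = NUM_SIGNS,
                variants: int = VARIANTS_PER_SIGN) -> list[Sample]:
    """List one entry per (sign, variation) pair."""
    return [Sample(s, v) for s in range(num_signs) for v in range(variants)]


def split_by_signer(samples: list[Sample],
                    held_out: set[int]) -> tuple[list[Sample], list[Sample]]:
    """Keep every sample from the held-out signers for evaluation only."""
    train = [s for s in samples if s.signer_id not in held_out]
    test = [s for s in samples if s.signer_id in held_out]
    return train, test


index = build_index()
train, test = split_by_signer(index, held_out={18, 19})
print(len(index), len(train), len(test))  # 20000 18000 2000
```

Holding out whole performers, rather than random samples, is a common way to check that a recognizer generalizes across different people's signing styles.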