White-Box AI: Russian Researchers Build Transparent, Legally Compliant System

Scientists in Russia have developed a fundamentally new artificial intelligence system that offers full transparency and traceability, providing a path toward legal compliance and human trust.

Logic-Based Cellular Automata as a Foundation

Researchers at the Institute of Theoretical and Experimental Biophysics (ITEB RAS) and the Institute of Cell Biophysics of the Russian Academy of Sciences have introduced an AI system that is fully transparent to humans. Unlike conventional AI models, which function as “black boxes,” the new approach makes every computational step visible.

The architecture is built on logical cellular automata, which support symbolic reasoning at several levels of system organization while accounting for dependencies between objects at the micro level. The system's ontology rests on an axiomatic, domain-specific theory, and the logical cellular automata provide its computational machinery.
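To make the idea of a logical cellular automaton concrete, here is a toy, hypothetical sketch. It is not the ITEB RAS model, whose states, lattice, and rules are not described in this article. It shows only the general principle: each cell's next state follows from an explicit if-then rule over the cell and its neighbors, so every decision can be recorded together with the rule that produced it. The names `Trace`, `apply_rules`, and `step`, the two states, and rules R1–R3 are all invented for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical cell states for a toy model (not the ITEB RAS state set).
FREE, OCCUPIED = 0, 1


@dataclass
class Trace:
    """One recorded decision: which rule fired for which cell at which time step."""
    time_step: int
    cell: tuple[int, int]
    rule: str
    before: int
    after: int


def neighbors(grid: list[list[int]], r: int, c: int) -> list[int]:
    """States of the four von Neumann neighbors, wrapping at the edges (torus)."""
    n_rows, n_cols = len(grid), len(grid[0])
    return [grid[(r + dr) % n_rows][(c + dc) % n_cols]
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1))]


def apply_rules(state: int, neigh: list[int]) -> tuple[int, str]:
    """Hypothetical if-then rules; every outcome is paired with the rule that produced it."""
    if state == FREE and OCCUPIED in neigh:
        return OCCUPIED, "R1: free cell with an occupied neighbor becomes occupied"
    if state == FREE:
        return FREE, "R2: free cell with no occupied neighbor stays free"
    return OCCUPIED, "R3: occupied cell stays occupied"


def step(grid: list[list[int]], t: int, log: list[Trace]) -> list[list[int]]:
    """Synchronous update: every cell reads the old grid, and every decision is logged."""
    new_grid = [row[:] for row in grid]
    for r, row in enumerate(grid):
        for c, state in enumerate(row):
            after, rule = apply_rules(state, neighbors(grid, r, c))
            new_grid[r][c] = after
            log.append(Trace(t, (r, c), rule, state, after))
    return new_grid


# Usage: one occupied cell in the center of a 3x3 torus.
grid = [[FREE, FREE, FREE],
        [FREE, OCCUPIED, FREE],
        [FREE, FREE, FREE]]
log: list[Trace] = []
grid = step(grid, 0, log)

# Print only the decisions that changed a cell's state.
for entry in log:
    if entry.before != entry.after:
        print(entry.cell, entry.rule)
```

After one step, the four edge cells adjacent to the center become occupied under R1, while the corners stay free under R2. Because the log holds all nine decisions, including the ones that changed nothing, any outcome can be traced back to a named rule. That is the property the article calls white-box reasoning, shown here only at the level of individual cells.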

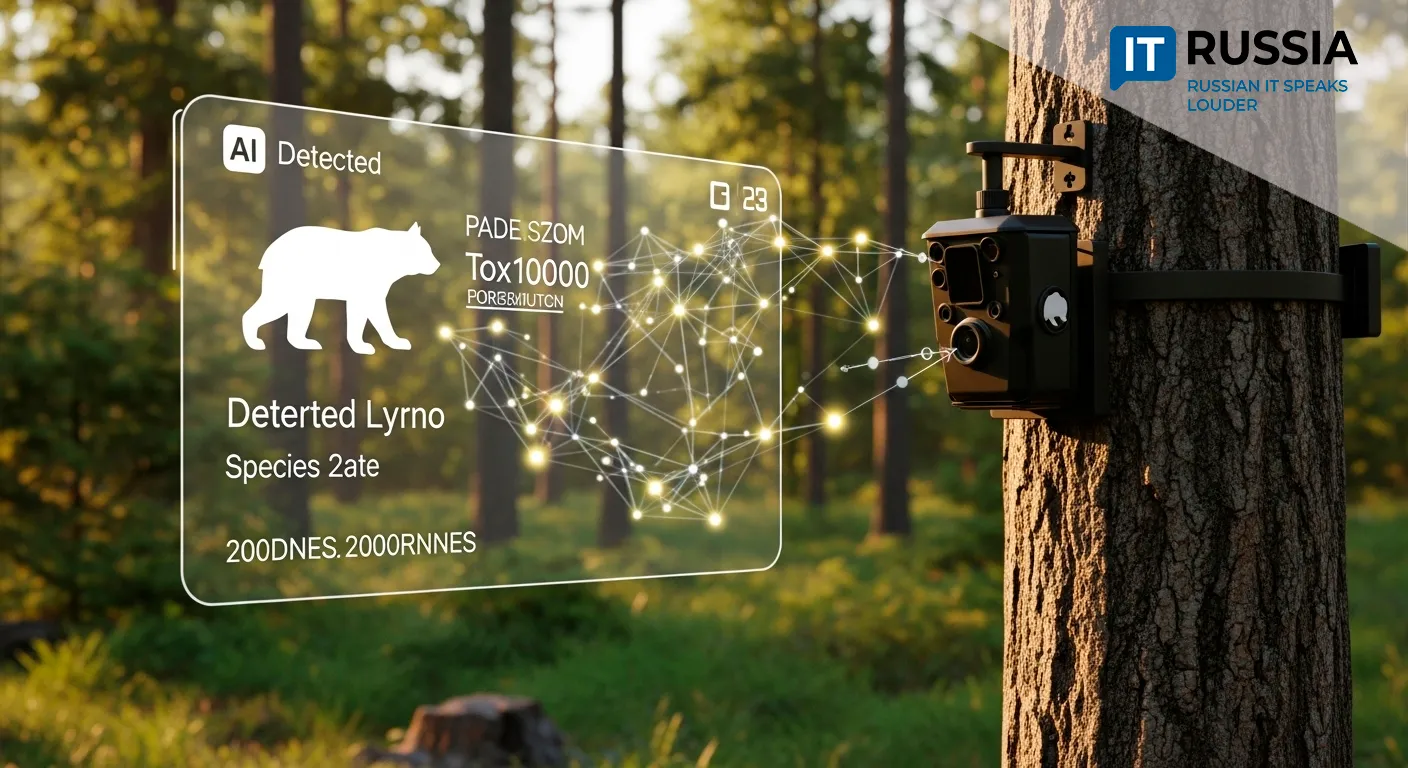

Originally designed to validate ecological hypotheses, the system now serves as the methodological basis for a broader research program on general-purpose symbolic AI. As Lev Kalmykov of ITEB RAS puts it, “The AI performs automated logical inference at three levels of system organization. Each decision point is accessible and explainable.”

The Problem with Black-Box AI

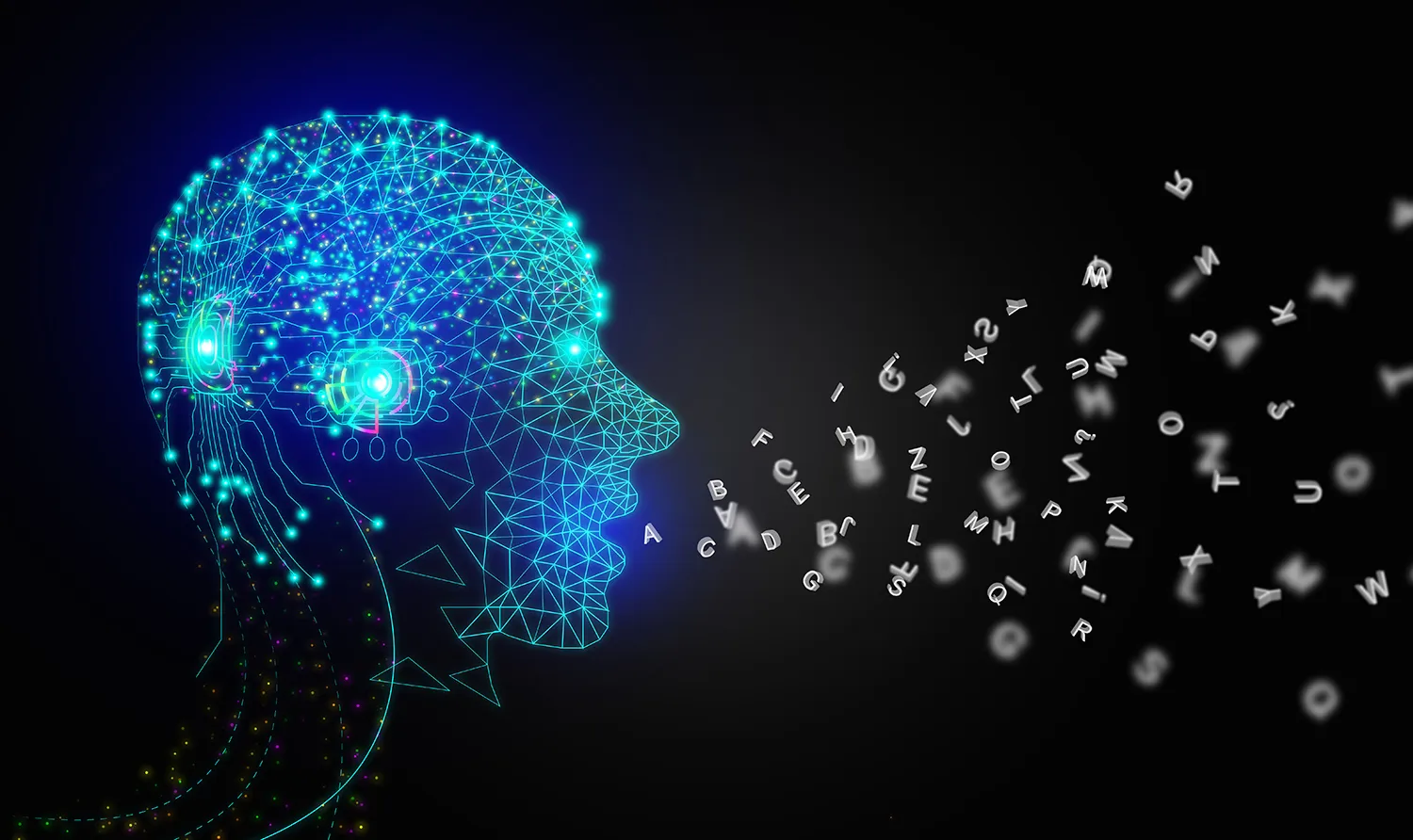

Modern machine learning systems often lack interpretability, making it nearly impossible for humans to understand how outputs are derived from inputs. The result is an opaque decision-making process that introduces risks of unintended behavior and complicates error correction.

This problem prompted the U.S. Defense Advanced Research Projects Agency (DARPA) to launch its Explainable AI (XAI) program in 2016. XAI made progress in interpreting neural networks, but it did not remove their statistical opacity or the inherent unpredictability of their decisions.

The Russian researchers call their symbolic model “eXplicitly eXplainable Artificial Intelligence” (XXAI). Rather than interpreting an opaque model after the fact, it is designed to make its reasoning fully traceable from the outset, addressing the core flaw of black-box systems and supporting safe, reliable applications.

Legal Compliance and Societal Trust

The ability to interpret AI decisions is not merely a technical improvement; it is a legal and ethical necessity. Transparent AI lets organizations check training data and decision logic for bias, trace the causes of failures, and improve performance iteratively.

This approach is especially relevant under the European Union’s Artificial Intelligence Act, which the European Parliament adopted in March 2024 and the Council of the EU approved in May 2024. The law requires strict oversight of high-risk systems, particularly those involving biometric data, access to essential services, or critical infrastructure.

Without interpretability, such oversight is impossible. Russia’s symbolic AI could help meet these requirements and could also serve as the foundation for hybrid AI systems that combine white-box transparency with the computational power of black-box models.

In the long term, this innovation could gain international traction, particularly in mission-critical applications where safety and auditability are paramount.