The State Duma Proposes Labeling AI-Generated Content in Election Campaigns

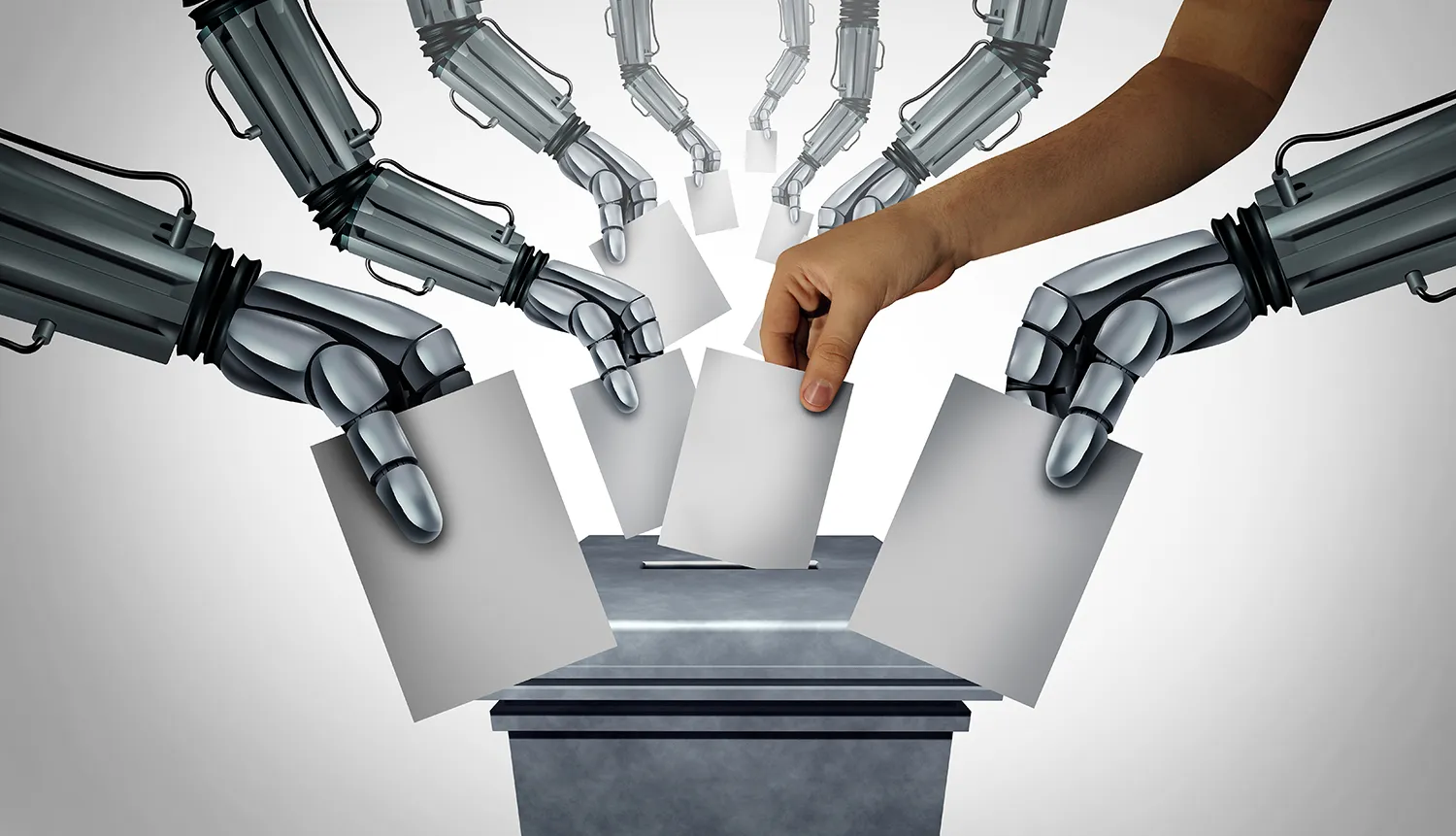

Russia is moving to regulate the use of artificial intelligence in political campaigning by requiring that voters be informed when videos, images, or other materials are created with neural networks. Lawmakers argue this will protect citizens from deepfakes and manipulative technologies.

Voters Should Know About AI

Lawmakers in the State Duma have proposed labeling campaign materials generated with AI, including those made with neural networks. The initiative comes from Anton Gorelkin, First Deputy Chair of the Committee on Information Policy, who believes voters must be aware when a candidate uses AI to produce campaign videos. Russia’s Central Election Commission (CEC) supports this view and stresses that the labels should be clear and visible so voters cannot miss that the content was AI-generated.

The idea is seen as timely. Although AI technologies are widely adopted, their use in elections is still not regulated by law. This leaves room for risks such as deepfakes, forged statements (including falsified official communications), and other manipulations. The absence of legislation has also left technical and legal questions open: who should label the content, how compliance should be monitored, and what penalties should apply to violations.

The issue affects the entire electorate. On the 2025 Single Voting Day, for example, 81 Russian regions are involved, with direct gubernatorial elections, legislative assembly races, and other electoral procedures taking place.

Labeling for Export

Draft bills and regulatory concepts for labeling AI content, including deepfakes and synthetic media, already exist. Lawmakers are also discussing codifying terms like “artificial intelligence,” “synthetic content,” and “deepfake.” As for the labeling itself, the consensus is that it should be easy to see—not hidden in small print—and may include watermarks or graphic markers. It will also be necessary to assign responsibility, set up monitoring systems, and define enforcement mechanisms.

However, risks remain. Fraudsters are unlikely to voluntarily label their materials, so marking alone may not be sufficient. Resistance is also possible from technology companies, media outlets, and candidates. Complying with such laws could mean added costs, technical complications, and slower campaign operations.

Other countries are already moving in this direction. The European Union, for example, has adopted its AI Act, which includes transparency and labeling requirements. If Russia’s legislation proves clear and enforceable, and is backed by effective detection technologies, it could serve as a regulatory model for export.

Government Branch Discussions

The first proposals to label AI-generated content and establish a legal framework appeared back in 2024, when the issue was discussed in the State Duma. Initiatives came not only from individual lawmakers but also from the Ministry of Digital Development and Roskomnadzor.

In 2025, CEC Chair Ella Pamfilova called for legislative regulation of AI in elections, highlighting the risks of fake content swaying public opinion. Current discussions in the State Duma and federal agencies extend beyond labeling and address accountability for publishers distributing deepfakes without consent. Some of these proposals are likely to form the basis of a new law, although a complete draft has not yet been introduced.

Digitalizing Elections

The labeling initiative fits into the broader context of digitalization and the risks of AI in politics. If implemented clearly and backed by enforcement, AI labeling could help build voter trust and increase transparency. Still, labeling alone will not solve the problem. Effective protections against manipulation, technological monitoring tools, and real accountability measures will also be needed.

It is expected that within one to two years, either a new bill will be adopted or the existing regulatory base will expand. During this time, lawmakers are likely to finalize definitions, set labeling standards, and introduce penalties. In the longer term, courts may issue rulings on violations, and automated tools for detecting deepfakes could be deployed. Over time, AI regulation in elections will likely become part of a broader system of AI governance, including restrictions on specific types of AI generation.