Russia Deploys Deepfake Detectors to Combat Cybercrime

New AI-powered systems are helping Russian banks and law enforcement identify synthetic voices and fake videos used in scams.

Russia is rolling out digital tools to detect deepfakes, AI-generated imitations of real people that are often used in fraud. Cybercriminals use synthetic images, voices, and videos to impersonate victims, sending urgent messages or requests for money from hacked accounts. These are among the milder schemes, but they point to a broader threat.

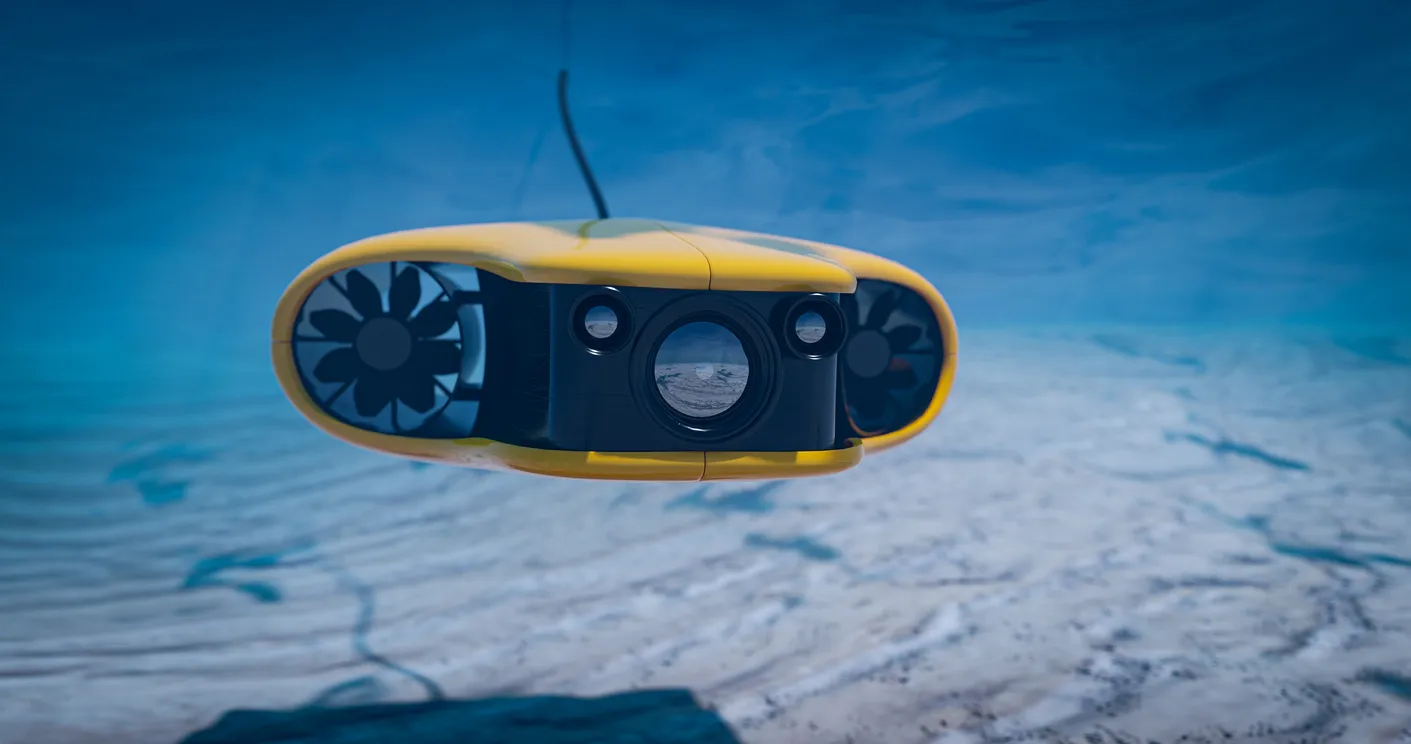

Specialized detectors, now being integrated primarily into banking systems, can spot manipulated photos, video, and audio. According to MLTimes, around 30 major projects have already adopted the technology. Developers say their systems can even identify which neural network tools were used to create a fake.

Unlike human investigators, who often struggle to tell real media from synthetic, the AI detectors offer high precision. As deepfake creation becomes faster and cheaper, the need for advanced protection tools is growing.

In parallel, lawmakers are working to strengthen legal frameworks. Soon, the use of deepfakes in cybercrimes may be classified as an aggravating circumstance under Russian law.