Russian Researchers Develop a Faster, Leaner Way to Train Artificial Intelligence

A new method from T-Bank AI Research dramatically cuts the memory and time needed to fine-tune AI models, while keeping their reasoning sharp and efficient.

Smarter Training, Smaller Footprint

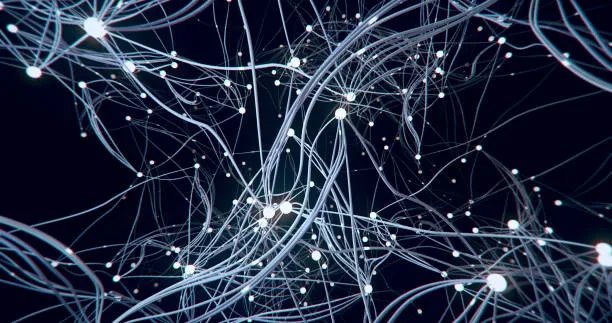

Researchers at Russia’s T-Bank AI Research lab have unveiled a method that improves the reasoning capabilities of large language models without costly full retraining. Instead of updating billions of parameters, the scientists add compact “tuning vectors”: lightweight modules that adjust an existing neural network and enhance its logical performance.
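The article does not publish the researchers' code. One common way to realize a small add-on of this kind is a learned vector added to the output of a frozen layer, often called a steering vector. The sketch below assumes that reading. `frozen_layer` and `with_tuning_vector` are hypothetical names, and the toy "layer" simply doubles its input. Only the vector `v` would be trained, while the layer itself stays untouched.

```python
from typing import Callable, List

Vector = List[float]


def frozen_layer(h: Vector) -> Vector:
    # Hypothetical stand-in for one pretrained layer; its weights are never updated.
    return [2.0 * x for x in h]


def with_tuning_vector(layer: Callable[[Vector], Vector], v: Vector) -> Callable[[Vector], Vector]:
    """Wrap a frozen layer so a small, trainable vector v is added to its output.

    Assumption: a "tuning vector" behaves like an additive steering vector.
    """
    def wrapped(h: Vector) -> Vector:
        out = layer(h)
        if len(out) != len(v):
            raise ValueError("tuning vector must match the hidden size")
        # Shift each hidden-state component by the learned offset.
        return [o + vi for o, vi in zip(out, v)]
    return wrapped


tuned = with_tuning_vector(frozen_layer, [0.5, -0.5, 0.0])
print(tuned([1.0, 2.0, 3.0]))  # [2.5, 3.5, 6.0]
```

Because the original layer is left as-is, removing the vector restores the base model exactly. This is one reason such add-ons are cheap to store and swap.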

Efficiency Without Sacrifice

The approach slashes computational demands. Instead of gigabytes of memory, the added parameters take up only a few hundred kilobytes, and fine-tuning finishes several times faster. Despite their small size, output quality remains intact: models retain their accuracy while becoming more adaptable and energy-efficient.
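A quick calculation shows why per-layer vectors land in the kilobyte range. The figures below are illustrative assumptions, not numbers from the research: one vector per layer, a hidden size of 4096, 32 layers, and 16-bit (2-byte) values. The comparison is against storing all weights of a hypothetical 8-billion-parameter model at the same precision. It counts stored weights only and ignores optimizer state and activations.

```python
def vector_memory_bytes(hidden_size: int, num_layers: int, bytes_per_value: int = 2) -> int:
    # Assumption: one tuning vector per layer, each with hidden_size entries.
    return hidden_size * num_layers * bytes_per_value


def full_model_bytes(num_params: int, bytes_per_value: int = 2) -> int:
    # Storage for every weight of the model at the same precision.
    return num_params * bytes_per_value


vec = vector_memory_bytes(4096, 32)      # illustrative sizes
full = full_model_bytes(8_000_000_000)   # hypothetical 8B-parameter model
print(vec // 1024, "KiB")  # 256 KiB
print(full / 1e9, "GB")    # 16.0 GB
```

Under these assumptions, the vectors are about 60,000 times smaller than the full set of weights. That gap is consistent with the "few hundred kilobytes versus gigabytes" contrast described above.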

The method has already been tested on models such as Qwen2.5 and LLaMA3, applied to logical and mathematical problems. The researchers also found that it makes the AI’s reasoning process more transparent, offering new insights into how neural networks arrive at their conclusions.

Toward Lightweight AI Models

The innovation could pave the way for “lightweight” AI systems that businesses and researchers can deploy without expensive computing infrastructure. It’s a step toward sustainable, efficient, and more accessible artificial intelligence — one where reasoning power no longer demands massive data centers.