The Limits of What Is Acceptable: AI Ethics, a Conversation That Cannot Be Postponed

As artificial intelligence becomes more convenient and more widespread, it also raises a new set of questions: Who is responsible for algorithmic errors? How are recommendations formed? And can a system be trusted without understanding its limits?

Not Outside Morality

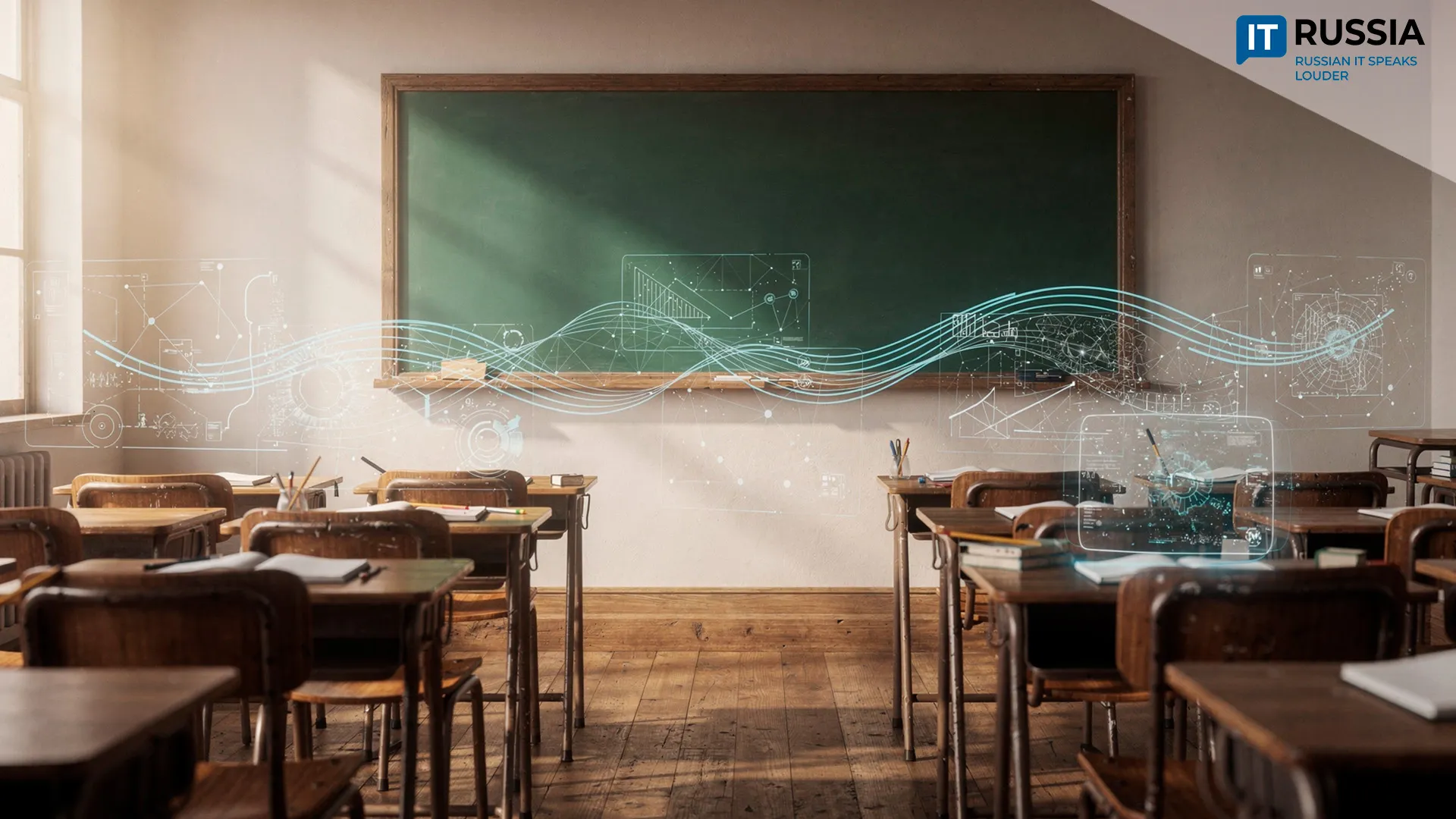

AI is increasingly entering the educational environment, from automated grading to personalized recommendations for students. As a result, the issue of responsible AI use is moving beyond a purely technical debate and becoming part of the educational agenda.

“Soft law should be used more broadly, meaning, first and foremost, a code of ethics in the field of artificial intelligence,” said Vladimir Putin.

Today, there is no single, definitive code of ethics for AI. The field is evolving faster than governments, businesses and experts can reach consensus. Instead of universal rules, dozens or even hundreds of separate guidelines are emerging. They are developed by companies, public agencies and international organizations, often with the involvement of specialists in ethics, law and technology. These documents differ in their details but converge on one core principle: AI cannot exist “outside morality.”

At the Crossroads of Algorithms and Responsibility

At the heart of most ethical frameworks lies fairness. Algorithms are expected to treat all people equally. Yet AI systems are trained on historical data, which often contain bias. Without oversight, AI does not eliminate inequality; it amplifies it.
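One simple way to see what such oversight can look like in practice is to compare how often a system gives a positive decision to different groups of people. The sketch below is only an illustration under stated assumptions: the group labels, the sample decisions and the function names `approval_rates` and `parity_gap` are all hypothetical, and a gap in approval rates is just one of many possible fairness signals, not a complete audit.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Return the share of positive decisions for each group.

    `decisions` is an iterable of (group, approved) pairs,
    where `approved` is True or False.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(decisions):
    """Difference between the highest and lowest group approval rates."""
    rates = approval_rates(decisions)
    return max(rates.values()) - min(rates.values())


# Hypothetical, made-up decisions for two groups, "A" and "B".
sample = [
    ("A", True), ("A", True), ("A", True), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

rates = approval_rates(sample)
gap = parity_gap(sample)
print(rates, gap)
```

A large gap does not prove discrimination on its own, but it is the kind of signal that should prompt a human reviewer to look at the data and the model more closely.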

The second principle is transparency and explainability. Users and regulators need to understand how AI systems reach decisions. A “black box” that influences people’s lives, whether in lending, hiring or healthcare, represents a serious ethical risk.

Accountability is no less important. If an AI system causes harm, responsibility must be clearly defined. Otherwise, the algorithm becomes a convenient excuse for human errors.

The risks are already tangible: biased outcomes, deepfakes, disinformation and pressure on labor markets. AI accelerates processes, but without supervision it scales mistakes just as quickly.

A Candid Conversation

“I doubt that local bans and restrictions will have much effect. What really matters is cultivating critical thinking and discussing models of how to build intellectual defenses,” says Noam Chomsky, the 96-year-old professor emeritus of linguistics at the Massachusetts Institute of Technology.

Specialized educational programs have emerged in response to public demand. Among the most widely known is the Elements of AI course, developed by the University of Helsinki with support from Finnish research centers, where a dedicated module focuses on the social and ethical aspects of AI.

On the Coursera platform, courses such as AI Ethics and Ethics of Artificial Intelligence are offered by universities and research institutes. They examine algorithmic bias, decision transparency and developer responsibility.

UNESCO also provides educational materials and training programs for educators based on its AI ethics recommendations, with certificates issued in Russian and English. These programs are designed not only for specialists but also for teachers and parents who need to understand how AI works without learning to code.

The Right to Intervene

AI ethics today is an attempt to maintain a balance between the benefits of technology and its impact on society. While there is still no universal AI ethics code, governments are beginning to establish legal frameworks. In Russia, a Code of Ethics in AI is in force as a voluntary, nonbinding document that organizations can choose to adopt. In the European Union, the AI Act came into force in August 2024, classifying AI systems by risk level and imposing mandatory requirements on the most sensitive applications.

Beyond critical thinking, AI ethics in education is closely linked to cybersecurity and digital hygiene. AI systems operate on massive datasets, often containing personal information. Data leaks, breaches and unauthorized access become not just technical failures but ethical breakdowns that undermine trust in technology as a whole.

This is why a growing number of experts agree on one point: human oversight is mandatory. Even the most advanced systems should not operate fully autonomously in sensitive areas. Humans must retain the right to intervene, pause or correct AI systems when necessary.