MEPhI Researchers Develop a More Effective Way to Suppress Noise in Digital Holography

Digital holography is now used in fields ranging from medical diagnostics to non-destructive testing of materials and industrial components.

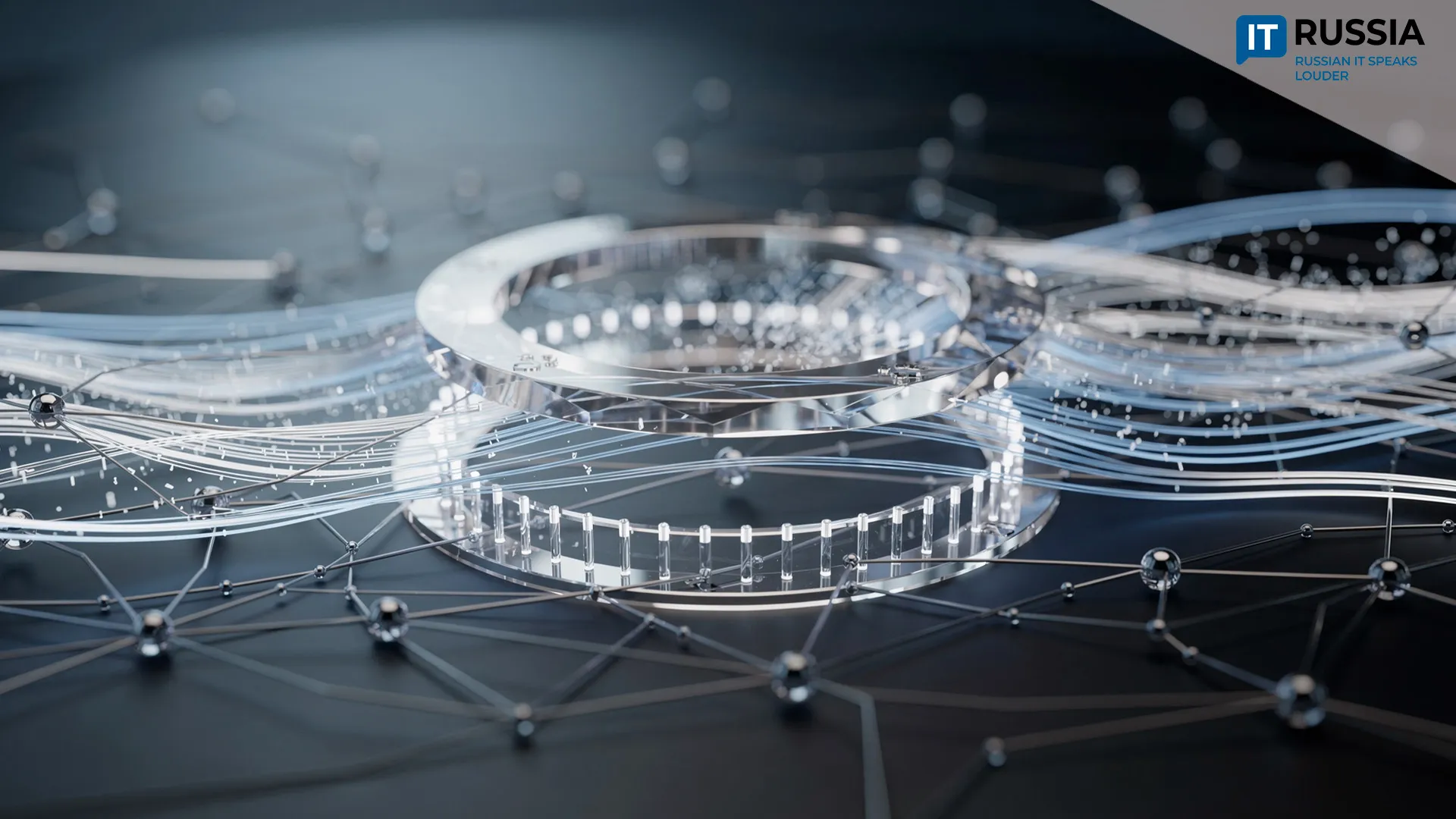

One of the main challenges in processing data from holographic systems is the high level of noise in images reconstructed from holograms and in cross-sections of three-dimensional scenes. The dominant type of interference is speckle noise: a granular pattern that arises because the coherent laser light used to record the hologram is scattered by the object and interferes randomly. It appears as fine grain across reconstructed images and degrades smooth, gradually changing features. Speckle noise obscures important object details, reduces image contrast, and complicates accurate data analysis.
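To make the notion of graininess concrete, here is a minimal simulation of speckle on a uniformly bright, featureless patch. It assumes the common textbook model of fully developed speckle, in which the recorded intensity equals the underlying brightness multiplied by unit-mean exponential noise, and it ignores the finite grain size of real speckle by drawing the noise independently per pixel. The helper names `speckle_frame` and `speckle_contrast` are hypothetical and not part of the MEPhI work. The speckle contrast C = σ/μ of such a patch comes out close to 1, meaning the random fluctuations are as large as the signal itself.

```python
import numpy as np


def speckle_frame(brightness: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Simulate one speckled intensity image (hypothetical helper).

    Assumes fully developed speckle: intensity = brightness * n, where n is
    unit-mean exponential noise drawn independently for every pixel.
    """
    return brightness * rng.exponential(scale=1.0, size=brightness.shape)


def speckle_contrast(region: np.ndarray) -> float:
    """Speckle contrast C = standard deviation / mean over a region."""
    return float(region.std() / region.mean())


rng = np.random.default_rng(0)
flat = np.full((256, 256), 0.8)  # a uniformly bright, featureless patch
frame = speckle_frame(flat, rng)
print(f"speckle contrast: {speckle_contrast(frame):.2f}")  # close to 1
```

A contrast near 1 on a surface that should look perfectly smooth is exactly the "fine grain" described above.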

Traditionally, this graininess is suppressed with digital filters: algorithms designed to correct such distortions. In practice, however, most of these algorithms either ignore local variations in the brightness statistics of the image or account for them only weakly. As a result, noise suppression often comes at the cost of lost detail in the reconstructed images.

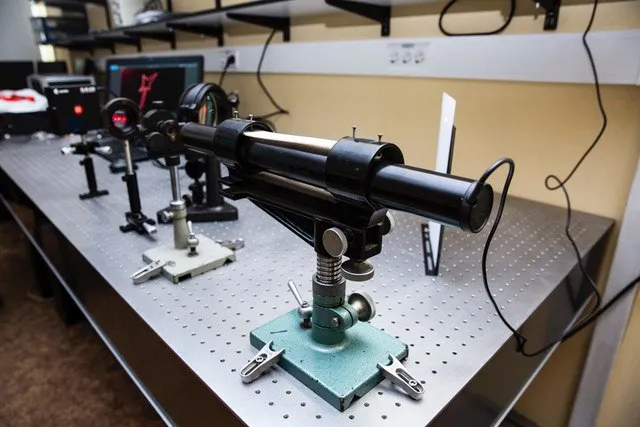

Researchers from the Laboratory of Photonics and Optical Information Processing at the Institute of Laser and Plasma Technologies, part of the National Research Nuclear University MEPhI, have proposed a fundamentally different approach. Instead of processing individual images, their method operates on an entire series of digital holograms of the same object recorded by a camera.

The approach rests on a simple physical principle: the speckle pattern changes randomly from frame to frame, while the object itself stays the same. All images are combined into a single three-dimensional data array, and the algorithm analyzes this stack to distinguish random noise, which varies across frames, from genuine object features, which appear consistently. The noise is thereby suppressed, and the series is merged into a single final image with substantially higher clarity and detail.
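The stacking idea can be illustrated with the simplest possible multi-frame technique: plain per-pixel averaging along the frame axis. This is a deliberately basic stand-in, not the MEPhI algorithm, whose internals the article does not describe. The sketch assumes that each frame shows the same unchanged object multiplied by statistically independent, unit-mean exponential speckle (a textbook model of fully developed speckle); the helpers `stack_frames` and `fuse_stack` and all numeric values are hypothetical.

```python
import numpy as np


def stack_frames(frames: list[np.ndarray]) -> np.ndarray:
    """Combine N images of shape (H, W) into one (N, H, W) data array."""
    return np.stack(frames, axis=0)


def fuse_stack(stack: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Plain per-pixel averaging along the frame axis (a simple stand-in).

    Returns the fused image and a map of how strongly each pixel
    fluctuates from frame to frame.
    """
    estimate = stack.mean(axis=0)    # stable structure survives, speckle averages down
    fluctuation = stack.std(axis=0)  # per-pixel spread across frames
    return estimate, fluctuation


rng = np.random.default_rng(1)
obj = np.full((128, 128), 0.2)
obj[32:96, 32:96] = 1.0  # a bright square stands in for the unchanged object

# Assumed model: each frame = object * independent unit-mean exponential speckle.
frames = [obj * rng.exponential(1.0, obj.shape) for _ in range(16)]
stack = stack_frames(frames)
estimate, fluctuation = fuse_stack(stack)

inner = estimate[40:88, 40:88]  # uniform interior of the square
contrast = float(inner.std() / inner.mean())
print(f"speckle contrast after fusing 16 frames: {contrast:.2f}")
```

Under these assumptions, averaging N independent frames lowers the speckle contrast by roughly a factor of 1/√N, to about 0.25 for 16 frames. Plain averaging treats every pixel identically; the `fluctuation` map only hints at how a more sophisticated algorithm could weigh pixels differently depending on how they vary across the stack.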

According to the researchers' performance tests, the method significantly outperforms widely used state-of-the-art algorithms such as BM3D and BM4D: it suppresses graininess 30–40 percent more effectively and preserves fine details up to 50 percent more accurately than existing approaches.

The technology has broad potential in areas that require precise visualization or high-quality characterization of two- and three-dimensional objects. In medicine, it could enable the examination of fine cellular and tissue structures for more accurate diagnostics. In industry, it could be used for microchip quality control and for detecting microscopic surface defects. In nano- and microscopy, the approach promises highly detailed imaging. In addition, the method could improve the quality and speed of holographic systems used for information encoding and protection.

The study demonstrates how combining laser-based opto-digital systems with advanced computational algorithms can solve a long-standing technical problem. This, in turn, opens new opportunities for creating more accurate and informative visualization systems in science, healthcare, and industry, with tangible benefits for both researchers and end users.