Russia Trains Neural Networks to Learn 40% Faster

Russian developers have unveiled a new neural-network quantization method that speeds models up by about 40 percent while reducing hardware requirements.

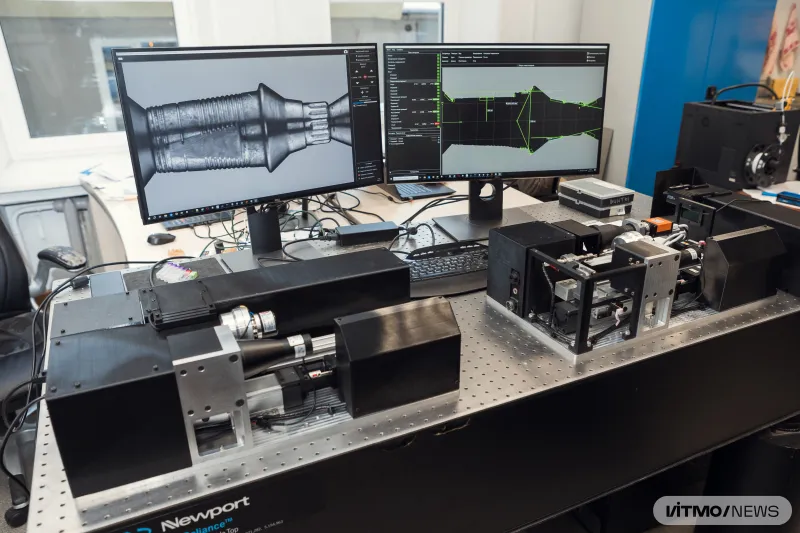

Researchers at the Russian company Smart Engines have developed a technology that makes neural networks run about 40 percent faster than existing models. In practical terms, AI systems can process data and return reliable results much more quickly without relying on expensive, power-hungry high-end hardware.

Rounding Without Losing Quality

Vladimir Arlazarov, CEO of Smart Engines and a Doctor of Technical Sciences, explained the core idea behind the breakthrough. The team proposed a new quantization scheme that reduces model size and complexity while preserving recognition and analysis quality.

In conventional neural networks, millions of fractional numbers (the model's weights) define how text, images, or speech are processed. Computers store these values in binary form: the more bits allocated to each number, the higher the precision, but also the greater the memory and compute cost. The standard format typically uses 32 bits. Quantization encodes the same values more compactly, using fewer bits per number. As a result, data is read faster and the model runs significantly quicker with almost no loss in accuracy.
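To make the bit-width trade-off concrete, here is a minimal sketch of conventional uniform 8-bit quantization. It is not Smart Engines' scheme, which the article does not describe in detail. Each 32-bit weight is replaced by a small integer code plus one shared scale factor; the random stand-in weights and the function names are illustrative assumptions.

```python
import random

def quantize_symmetric(values, bits):
    """Map floats to signed integer codes in [-qmax, qmax] that share one scale factor."""
    qmax = 2 ** (bits - 1) - 1                    # 127 for 8 bits
    scale = max(abs(v) for v in values) / qmax    # assumes at least one non-zero value
    codes = [round(v / scale) for v in values]    # nearest integer code
    return codes, scale

def dequantize(codes, scale):
    """Approximate the original floats from their integer codes."""
    return [c * scale for c in codes]

def storage_bytes(count, bits):
    """Bytes needed to store `count` values at `bits` bits each (ignoring the scale)."""
    return count * bits // 8

random.seed(0)
weights = [random.uniform(-1.0, 1.0) for _ in range(1000)]  # stand-in for real weights
codes, scale = quantize_symmetric(weights, bits=8)
restored = dequantize(codes, scale)
max_err = max(abs(w - r) for w, r in zip(weights, restored))

print(f"32-bit floats: {storage_bytes(len(weights), 32)} bytes")
print(f"8-bit codes:   {storage_bytes(len(codes), 8)} bytes")
print(f"largest rounding error: {max_err:.5f} (at most scale/2 = {scale / 2:.5f})")
```

The 8-bit codes take a quarter of the memory, and every restored value lies within half a quantization step of the original, which is the "almost no loss in accuracy" the paragraph refers to.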

Smart Engines adopted an unconventional scheme in which the model operates with data equivalent to 4.6 bits per value instead of the 8 bits typical of conventionally quantized models. Quality is preserved because the system tolerates small errors, while the compression is designed to keep the most important data precise. This lets the simplified models run on virtually any laptop or smartphone.
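A width "equivalent to 4.6 bits" is not a literal 4.6-bit field. One common way to read a fractional width is the information carried by a quantizer with a non-power-of-two number of levels, log2(levels). The article does not say how many levels Smart Engines uses; 25 levels below is a hypothetical choice picked only because log2(25) ≈ 4.64. The sketch compares rounding error on a 256-level (8-bit), 25-level, and 16-level (4-bit) grid.

```python
import math
import random

def quantize_levels(values, levels):
    """Round floats in [-1, 1] to the nearest of `levels` evenly spaced grid points."""
    step = 2.0 / (levels - 1)                              # distance between grid points
    codes = [round((v + 1.0) / step) for v in values]      # integer codes 0..levels-1
    return [c * step - 1.0 for c in codes]                 # values back on the [-1, 1] grid

def rmse(a, b):
    """Root-mean-square difference between two equal-length sequences."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))

random.seed(1)
weights = [random.uniform(-1.0, 1.0) for _ in range(10_000)]  # stand-in weights

results = {}
for levels in (256, 25, 16):  # 25 levels is a hypothetical choice giving ~4.6 bits
    bits = math.log2(levels)  # information per value for a grid with `levels` points
    results[levels] = (bits, rmse(weights, quantize_levels(weights, levels)))
    print(f"{levels:>3} levels: {bits:.2f} bits/value, RMSE {results[levels][1]:.4f}")
```

Fewer levels mean larger rounding error, which is why the scheme must keep the most important data precise; this sketch applies one uniform grid to every value and makes no such attempt. Actually storing about 4.6 bits per value in memory would also require packing several codes together, and the article does not say how (or whether) Smart Engines does this.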

More Than Just a Number

A 40 percent speedup means that complex tasks — such as object recognition in images or text processing — can be performed faster and at lower cost. Smart Engines is already using the technology in its software products for document and image recognition.
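It helps to spell out what the headline figure implies for processing time. Assuming "40 percent faster" means 1.4 times the throughput (the article does not specify), the time per task becomes

$$
t_{\text{new}} = \frac{t_{\text{old}}}{1.4} \approx 0.71\,t_{\text{old}},
$$

so each task takes roughly 29 percent less time. If the figure instead means 40 percent less time, then $t_{\text{new}} = 0.6\,t_{\text{old}}$, which corresponds to about $1/0.6 \approx 1.67$ times the throughput.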

The invention could reshape approaches to AI development. Complex models such as generative networks or large language models, which are trained on billions of data points and require massive computing resources, have traditionally depended on powerful servers. By lowering hardware demands, AI could move closer to users — running directly on phones or tablets.
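To put the hardware point in numbers, the sketch below estimates how much memory a model's weights alone occupy at different bit widths. The one-billion-parameter size is a hypothetical round number, not a figure from the article, and a real deployment also needs memory for activations and runtime overhead.

```python
def footprint_gb(num_params, bits_per_param):
    """Memory for the weights only, in gigabytes (1 GB = 10**9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9

NUM_PARAMS = 1_000_000_000  # hypothetical model size, not taken from the article

for label, bits in (("float32", 32), ("int8", 8), ("4.6-bit", 4.6)):
    print(f"{label:>8}: {footprint_gb(NUM_PARAMS, bits):.2f} GB")
```

Going from 32-bit floats to about 4.6 bits per weight shrinks the weight storage roughly sevenfold (32 / 4.6 ≈ 7), which is the kind of reduction that makes phones and tablets plausible targets.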

Most importantly, the development addresses a practical bottleneck. A large share of computing resources is consumed during the training and adaptation of AI models. Improving algorithms in this way offers a path to faster AI without increasing costs or energy consumption.